The design of a vision system starts with the selection of an image sensor. One of the main characteristics of an image sensor is its PLS value. How much influence PLS has on your system depends on the type of measurements you want to do.

PLS stands for Parasitic Light Sensitivity, literally meaning that light parasitically affects the sensor. PLS is a characteristic of the sensor: in fact, it is a measure of the global shutter efficiency. So, cameras that use the same sensor have the same PLS value. As an example, Table 1 shows the PLS values for several Adimec cameras as defined by their image sensors. As you can see, the PLS value varies greatly between the image sensors.

Let’s take a look at what the PLS value means. A PLS value of 1/700 means that 1 out of 700 photons parasitically affects the sensor during readout, and a specification of "less than 1/700" means the sensor does at least that well. The higher the PLS value, the stronger the parasitic effect on your image.
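As a rough illustration of that ratio, the sketch below estimates how many parasitic photons are picked up for a given number of photons arriving while the charge is waiting to be read out. The function name and the photon count are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from fractions import Fraction


def parasitic_photons(photons_during_readout: int, pls: Fraction) -> float:
    """Estimate the photons that leak into the stored signal.

    Assumes the simple reading of the PLS value used in the text:
    a PLS of 1/700 means 1 out of 700 photons has a parasitic effect.
    """
    return float(photons_during_readout * pls)


# Hypothetical example: 70,000 photons arrive while the line waits for readout.
leaked = parasitic_photons(70_000, Fraction(1, 700))
print(leaked)  # 100.0
```

With a PLS of 1/7000 the same illumination would leak only a tenth of that, which is why the value differs so much in practice between sensors.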

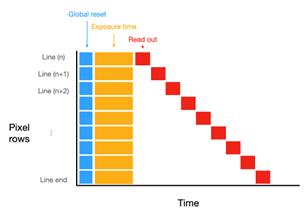

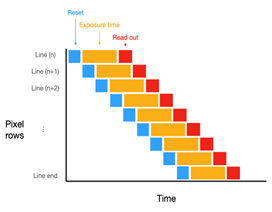

To understand the PLS effect during sensor readout, we will first look at the shutter method. Whereas the rolling shutter continuously reads out the sensor row by row, the global shutter exposes the whole frame at once and uses a memory node to store the charge until it is read out. PLS is caused by leakage from the photodiode to the memory node.

Figure 1: The readout scheme for a rolling shutter sensor (left) and global shutter sensor (right).

With a rolling shutter, PLS does not occur because each line goes through reset, exposure, and readout in sequence. There is no delay between the end of exposure and the start of readout, as you can see in Figure 1 on the left.

Rolling shutters are primarily used in high-resolution applications. However, they can show the “jello effect” when capturing moving objects. This is why most machine vision applications use global shutters.

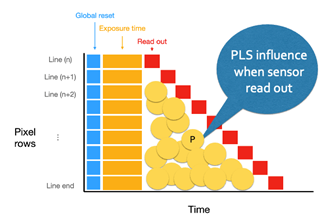

With a global shutter, both reset and exposure are done simultaneously for all lines, followed by a sequential readout. As shown in Figure 1 on the right, as the line count increases, the time delay between the end of exposure and the start of readout becomes more significant. When the video level of a line of pixels is read out, you only want to capture the photons that entered the sensor during the exposure time. However, as can be seen in Figure 2, photons can still influence the sensor in the time frame between the end of exposure and the start of readout. These unwanted, parasitic photons are also collected by the pixels and read out. If there are no objects present, these photons have a gradually more visible impact toward the bottom lines (if the sensor is read out from top to bottom).

Figure 2: Parasitic photons are collected between end of exposure and start of readout (left). This effect is more visible in the bottom lines (right).

In an empty scene, the effect of PLS on the image increases linearly with the number of lines that have already been read out. However, if there is an object present, it blocks part of the photons, and the PLS effect varies with the shape of the object. As a result, the object in the current frame can become slightly visible in the image of the previous frame, which is still being read out.
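A minimal sketch of this behavior, assuming a deliberately simplified model: each row waits `row * line_time_s` seconds before it is read out, and during that wait a fraction `pls` of the incoming photons leaks into the stored signal. The function name, line time, and photon flux are hypothetical values for illustration, not data from a real sensor.

```python
def parasitic_per_row(flux_per_row, line_time_s, pls):
    """Parasitic signal per row for a top-to-bottom readout.

    flux_per_row[r] is the photon rate (photons/s) hitting a pixel in row r
    while that row is waiting to be read out. Row r waits r * line_time_s.
    """
    return [pls * flux * row * line_time_s
            for row, flux in enumerate(flux_per_row)]


ROWS = 8             # hypothetical tiny sensor
LINE_TIME_S = 10e-6  # hypothetical readout time per line
FLUX = 7e6           # hypothetical photons/s per pixel
PLS = 1 / 700

# Empty scene: the same flux on every row gives a linear ramp.
uniform = parasitic_per_row([FLUX] * ROWS, LINE_TIME_S, PLS)

# An object in the current frame blocks the light on rows 4 and 5.
flux_with_object = [0.0 if row in (4, 5) else FLUX for row in range(ROWS)]
with_object = parasitic_per_row(flux_with_object, LINE_TIME_S, PLS)

for row, (u, o) in enumerate(zip(uniform, with_object)):
    print(f"row {row}: empty scene {u:.2f}, with object {o:.2f}")
```

In the empty scene the parasitic signal grows by the same amount per row. With the object present, the ramp is interrupted exactly where the object blocks the light, which is how its shape ends up in the image that is still being read out.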

The same effect happens when light sources of different colors or different light angles are used between consecutive frames. Through PLS, the current light source affects the readout of the previous frame, resulting in, for example, overexposed parts of the image.

If PLS had a purely linear effect on the image, you could create a correction algorithm. However, because objects influence the image readout of the previous frame, this is not possible. The camera cannot predict the objects in the next frame, so it cannot predict the PLS effect and correct for it.
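To make this concrete, here is a small sketch of what a fixed correction would do. It assumes a hypothetical camera that calibrates a linear ramp on an empty scene and subtracts it from every frame. The `slope` parameter lumps together the PLS value and the line time, and all numbers are illustrative.

```python
def parasitic_ramp(flux_per_row, slope):
    """Parasitic signal per row: proportional to the row's wait and its flux."""
    return [slope * flux * row for row, flux in enumerate(flux_per_row)]


def subtract_ramp(frame, ramp):
    """Naive correction: subtract a pre-calibrated ramp from each row."""
    return [value - offset for value, offset in zip(frame, ramp)]


ROWS = 6
SLOPE = 0.5                  # hypothetical parasitic signal per row of delay
true_signal = [100.0] * ROWS

# Calibration on an empty scene (relative flux 1.0 on every row).
calibrated = parasitic_ramp([1.0] * ROWS, SLOPE)

# Case 1: the next frame is also an empty scene.
measured_empty = [s + p for s, p in zip(true_signal, calibrated)]
corrected_empty = subtract_ramp(measured_empty, calibrated)

# Case 2: an object in the next frame blocks the light on rows 3 and 4.
flux_next = [1.0, 1.0, 1.0, 0.0, 0.0, 1.0]
measured_object = [s + p for s, p in
                   zip(true_signal, parasitic_ramp(flux_next, SLOPE))]
corrected_object = subtract_ramp(measured_object, calibrated)

print(corrected_empty)   # [100.0, 100.0, 100.0, 100.0, 100.0, 100.0]
print(corrected_object)  # [100.0, 100.0, 100.0, 98.5, 98.0, 100.0]
```

The fixed ramp restores the empty scene, but it over-corrects the rows that the object shielded. A correct ramp would require knowing the content of the next frame in advance.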

Thus, PLS is determined by the sensor and cannot be corrected by the camera. In our next blog we will discuss system design considerations to reduce the effect of PLS on your application.

Read more about sensor characteristics on [this page](https://www.adimec.com/resources/sensor-characteristics/).
